Carolyn Hill and Barbara Condliffe

Researchers and funders often want to know not just whether a social program works, but how and why—the terrain of implementation research. Here, Carolyn Hill and Barbara Condliffe present posts from contributors inside and outside MDRC, offering lessons from past program evaluations and insights from ongoing studies that can improve research approaches.

Short-term findings from implementation research that is part of a larger impact study can reveal insights that are valuable to both program operators and researchers. This blog post describes an example from a collaboration between MDRC and a high school reform organization.

A Resource for Organizational Leaders Participating in Research Studies

What COVID-19 Adaptations We Will Take with Us, and What We Will Gladly Leave Behind

With the onset of the pandemic, MDRC implementation researchers halted travel to programs and transitioned their work to virtual modes. This blog post offers reflections on adaptations they made that are worth continuing post-pandemic and on the in-person practices they look forward to resuming.

Implementation researchers can be good partners to program operators at this difficult time — by being sensitive to the new constraints that programs face, by assessing how learning agendas and evaluation plans need to change, and by helping programs learn from the adaptations they are making in response to the pandemic.

As the coronavirus pandemic unfolds, researchers are considering the implications of moving on-site data collection with program staff and participants to virtual settings. This post from the Implementation Research Incubator offers advice about switching from in-person focus groups to virtual focus groups.

Profiles of Three Partnerships

MDRC designs its implementation research to inform ongoing program practice. In this short video, leaders from three programs describe their work with MDRC: Heather Clawson from Communities In Schools, Monica Rodriguez from Detroit Promise Path, and Karen Pennington from Madison Strategies.

What constitutes rigor in qualitative research is a conversation we’d like to advance. In our recent article in the Journal of Public Administration Research and Theory, we explore this notion in the context of public management. The conversation is also of central concern to the field of program evaluation, which has been a leader in advancing both qualitative and mixed methods. We’re especially interested in engaging consumers of qualitative research who are more familiar with designing, conducting, or interpreting social science research that uses quantitative methods.

The past year was another active one for implementation research at MDRC. In this post, we highlight some of our activities and preview what’s in store for 2019.

A “One-Page Protocol” Approach

In a previous Incubator post, our colleagues pointed out that leading effective focus groups for implementation research requires clarity about the critical topics to explore. Our experience conducting the focus groups for our evaluation of PowerTeaching illustrates how we targeted a few key topics while at the same time encouraging conversational flow. We prepared a framework in advance to help us analyze the information we gathered during the sessions.

MDRC’s implementation research (IR) is a team effort. IR takes careful planning and coordination among internal team members, whether the project is short term or long term, small or large in scope, informing early program development or assessing an established intervention’s fidelity to its model, standing on its own or embedded in a larger study. It’s a multidisciplinary exercise that draws on diverse staff expertise and a range of information.

Treatment contrast — the difference between what the program group and control group in an evaluation receive — is fundamental for understanding what evaluation findings about the effects of a program actually mean. There’s no strict recipe for measuring treatment contrast, because each study and setting will have nuances. And while “nuance” can’t be an excuse for ignoring treatment contrast or for an anything-goes approach to it, there’s surprisingly little detailed and practical guidance about how to conceptualize and measure it. We offer some pertinent considerations.

Rural school districts in low-income communities face unique challenges in preparing and inspiring students to go to college. As part of a federal Investing in Innovation (i3) development grant, MDRC supported and evaluated an initiative that sought to improve college readiness in rural schools, in part by aligning teaching strategies across grade levels and between schools and the local college. Our implementation research allowed us to provide formative feedback that program leaders used to improve the process.

As part of our study of Making Pre-K Count, an innovative preschool math program, we conducted in-depth qualitative research to better understand how the program produced its effects. The program has several instructional components that are directly measurable, but one key process-related ingredient in the program’s theory of change is less well understood — that is, teachers’ ability to differentiate their instruction to individual students’ needs. What can we learn about a main intervention component that is challenging both to implement and to document?

How do we build evidence about effective policies and programs? The process is commonly depicted as a pipeline: from developing a new intervention to testing it on a small scale, to conducting impact studies in new locations, to expanding effective interventions. But an updated depiction of evidence building could better reflect realities of decision making and practice in the field. We describe a cyclical framework that encompasses implementation, adaptation, and continued evidence building. Implementation research takes on a central role in this updated model.

Implementation research in program evaluations plays a critical role in helping researchers and practitioners understand how programs operate, why programs did or did not produce impacts, what factors influenced the staff’s ability to operate the intervention, and how staff members and participants view the program. Often, implementation research does not directly address scale-up questions — whether, when, and how effective programs can be expanded — until decision makers and evaluators are at the cusp of considering this step. Yet implementation research from the early stages of evidence building can be harnessed to inform program scale-up later on.

We’re getting in the “year-end wrap-up” spirit a bit early here at the Incubator. In this post, we highlight some implementation research activities at MDRC over the past year and preview what’s in store for 2018.

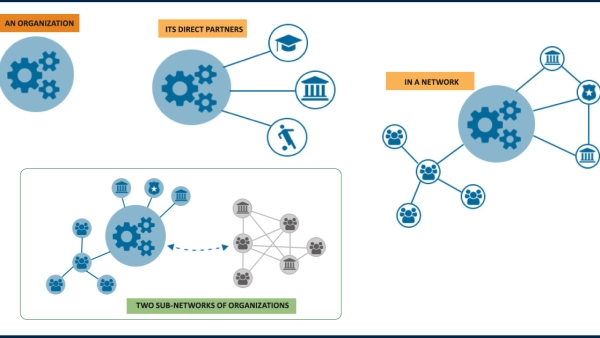

For implementation research, measuring how community networks are deployed to deliver programs means taking seriously their specific characteristics and how these help or hinder local programs. In other words, the field should become better at reading networks. Our mixed-methods Chicago Community Networks study shows how we go about this.

A growing number of public, nonprofit, and for-profit organizations, in an effort to target resources effectively and efficiently, are turning to predictive analytics. The idea of predicting levels of risk to help organizations identify which staff members or clients need support appeals to program managers and evaluators alike. MDRC has been working with organizations to develop, apply, and learn from predictive analytic tools. A key insight from our application of predictive analytics is its power to jump-start conversations about program implementation and practice, which suggests that implementation researchers will increasingly need to understand these tools.

Insights from Qualitative and Quantitative Analyses of the PACE Center for Girls

The culture of a program, also known as the program environment, is often of great interest in social services. Many researchers and practitioners view program culture or environment as a key aspect of service delivery and as a potential influence on participant outcomes. Staff members sometimes refer to their program environment as the “secret sauce” that no one knows exactly how to replicate. How, then, can researchers measure program environment and understand how it develops?

Interviews or Focus Groups?

As implementation researchers, we often want to hear directly from program staff members and program participants in order to understand their perspectives and experiences. Gathering these perspectives can involve one-on-one interviews or focus groups — but which approach is more appropriate? We summarize a few key considerations for conducting interviews or focus groups for implementation research.

The Multistate Evaluation of Response to Intervention Practices

When schools or programs face challenges in delivering services — such as limited time — and researchers are not on-site to monitor their implementation, how can the researchers know what is happening and how it varies across sites? MDRC’s evaluation of the Response to Intervention reading framework highlights ways to document how schools use their time and which students receive services.

How are evidence-based practices — approaches to organizing and delivering services that have been rigorously evaluated — implemented within organizations that deliver many services, some of which are not evidence-based? As part of our implementation study of the Children’s Institute, Inc., a multiservice organization in Los Angeles working with low-income children and families, we studied how the staff integrated evidence-based practices into its services, and the challenges that arose along the way.

Researchers studying education, youth development, or family support interventions sometimes encounter situations where the program staff is adjusting and adapting program components. Sometimes this is done to fit the needs of clients or budgets. Adaptations also may be made during multiyear programs and studies, for reasons such as staff turnover and budget changes. Such adaptations are likely to occur whether or not sites in a randomized controlled trial are receiving specific implementation guidance.

Instead of viewing adaptation only as an impediment to treatment fidelity, a nuisance that must be managed, we’ve been thinking about how we can anticipate these adaptations in our implementation research.

Welcome to MDRC’s Implementation Research Incubator! We’re glad you’re here. Our monthly posts aim to inform implementation research in social policy evaluations, through

- sharing ideas about implementation research data, methods, analysis, and findings
- fostering development of ideas and insights about implementation research
- integrating understanding across policy domains and academic disciplines
- advancing transparent, rigorous implementation research
- informing implementation practice and scale-up of evidence-based programs